I'm continuing my series of essays on the future of news; this essay may look like a serious digression, but trust me, it's not. The future of news, in my opinion, is about cooperation; it's about allowing multiple voices to work together on the same story; it's about allowing users to critically evaluate different versions of the story to judge which is more trustworthy. This essay introduces some of the technical underpinnings that may make that possible.

In January 2015, I ran a workshop in Birnam on Land Reform. While planning the workshop, I realised I would need a mechanism for people who had attended the workshop to work cooperatively on the document which was the output of the event. So, obviously, I needed a Wiki. I could have put the wiki on a commercial Wiki site like Wikia, but if I wanted to control who could edit it I'd have to pay, and in any case it would be plastered with advertising.

So I decided to install a Wiki engine on my own server. I needed a Wiki engine which was small and easy to manage; which didn't have too many software dependencies; which was easy to back up; and on which I could control who could edit pages. So I did a quick survey of what was available.

Wiki engines store documents, and versions of those documents. As many people work on those documents, the version history can get complex. Most Wiki engines store their documents in relational databases. Relational databases are large, complex bits of software, and when they need to be upgraded it can be a pain. Furthermore, relational databases are not designed to store documents; they're at their best with fixed size data records. A Wiki backed by a relational database works, but it isn't a good fit.

Software is composed of documents too - and the documents of which software is composed are often revised many, many times. In 2012 the Linux kernel consisted of 37,000 documents, containing 15,004,006 lines of code (it's now over twenty million lines of code). It's worked on by over 1,000 developers, many of whom are volunteers. Managing all that is a considerable task; it needs a revision control system.

In the early days, when Linus Torvalds, the original author of Linux, was working with a small group of other volunteers on the early versions of the kernel, revision control was managed in the revision control system we all used back in the 1980s and 90s: the Concurrent Versions System, or CVS. CVS had a lot of faults, but it was a good system for small teams to use. However, Linux soon outgrew CVS, and so the kernel developers switched to a proprietary revision control system, BitKeeper, which the copyright holders of that system allowed them to use for free.

However, in 2005, the copyright holders withdrew their permission to use BitKeeper, claiming that some of the kernel developers had tried to reverse engineer it. This caused a crisis, because there was no other revision control system then available sophisticated enough to manage distributed development on the scale of the Linux kernel.

What makes Linus Torvalds one of the great software engineers of all time is what he did then. He sat down on 3rd April 2005 to write a new revision control system from scratch, to manage the largest software development project in the world. By the 29th, he'd finished, and Git was working.

Git is extremely reliable, extremely secure, extremely sophisticated, extremely efficient - and at the same time, it's small. And what it does is manage revisions of documents. A Wiki must manage revisions of documents too - and display them. A Wiki backed by Git as a document store looked a very good fit, and I found one: a thing called Gollum.

Gollum did everything I wanted, except that it did not authenticate users, so I couldn't control who could edit documents. Gollum is written in a language called Ruby, which I don't like. So I thought about whether it was worth trying to write an authentication module in Ruby, or whether to simply start again from scratch in a language I do like - Clojure.

I started working on my new wiki, Smeagol, on 10th November 2014, and on the following day it was working. On the 14th, it was live on the Internet, acting as the website for the Birnam workshop.

It was, at that stage, crude. Authentication worked, but the security wasn't very good. The edit screen was very basic. But it was good enough. I've done a bit more work on it in the intervening time; it's now very much more secure, it shows changes between different versions of a document better (but still not as well as I'd like), and it has one or two other technically interesting features. It's almost at a stage of being 'finished', and is, in fact, already used by quite a lot of other people around the world.

But Smeagol exploits only a small part of the power of Git. Smeagol tracks versions of documents, and allows them to be backed up easily; it allows multiple people to edit them. But it doesn't allow multiple concurrent versions of the same document; in Git terms, it maintains only one branch. And Smeagol currently allows you to compare a version of the document only with the most recent version; it doesn't allow you to compare two arbitrary versions. Furthermore, the format of the comparison, while, I think, adequately clear, is not very pretty. Finally, because it maintains only one branch, Smeagol has no mechanism to merge branches.
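The gap between Smeagol's single-branch, compare-with-latest history and what Git makes possible is easier to see in code. Below is a minimal Python sketch, not Smeagol's code (Smeagol is written in Clojure): each version of a page is stored under the SHA-1 object id Git computes for file content (a `blob <size>` header, a NUL byte, then the content), and any two versions, not just the newest, can be compared with a unified diff. The `DocumentHistory` class and its methods are hypothetical; real Git also records trees and commits, which this sketch replaces with a plain ordered list, so it has no branches and no merging.

```python
import difflib
import hashlib


def git_blob_id(text: str) -> str:
    """The object id Git gives a file's content: SHA-1 of a 'blob <size>\\0' header plus the bytes."""
    data = text.encode("utf-8")
    return hashlib.sha1(b"blob %d\0" % len(data) + data).hexdigest()


class DocumentHistory:
    """Hypothetical single-branch page history; an illustration, not Smeagol's implementation."""

    def __init__(self):
        self.objects = {}   # blob id -> text: content-addressed, so identical text is stored once
        self.versions = []  # blob ids in commit order, oldest first

    def commit(self, text):
        blob = git_blob_id(text)
        self.objects[blob] = text
        self.versions.append(blob)
        return blob

    def diff(self, old, new):
        """Unified diff between any two versions (by index), not only against the latest."""
        before = self.objects[self.versions[old]].splitlines(keepends=True)
        after = self.objects[self.versions[new]].splitlines(keepends=True)
        return "".join(difflib.unified_diff(before, after, f"v{old}", f"v{new}"))


if __name__ == "__main__":
    page = DocumentHistory()
    page.commit("Land reform\n")
    page.commit("Land reform in Scotland\n")
    page.commit("Land reform in Scotland\nDraft 3\n")
    print(page.diff(0, 2))  # compare the first and third versions directly
```

Because versions are addressed by their content, restoring an old version costs nothing extra to store; it simply points back at an existing object.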

But: does Smeagol - that simple Wiki engine I whipped up in a few days to solve the problem of collaboratively editing a single document - hold some lessons for how we build a collaborative news system?

Yes, I think it does.

The National publishes of the order of 120 stories per issue, and of the order of 300 issues per year. That's 36,000 documents per year, a volume on the scale of the Linux kernel. There are a few tens of blogs, each with at most a few tens of writers, contributing to Scottish political blogging; and at most a few hundred journalists.

So a collaborative news system for Scottish news is within the scale of technology we already know how to handle. How much further it could scale, I don't know. And how it would cope with federation I don't yet know - that might greatly increase the scaling problems. But I will deal with federation in another essay later.

Friday, 25 August 2017

Trust me

Reality is contested, and it is an essential part of reality that reality is contested. There are two reasons that reality is contested: one is, obviously, that people mendaciously misreport reality in order to deceive. That's a problem, and it's quite a big one; but (in my opinion) it pales into insignificance beside another. Honest people honestly perceive reality differently.

If you interview three witnesses to some real-life event, you'll get three accounts; and it's highly likely that those accounts will be - possibly sharply - different. That doesn't mean that any of them are lying or are untrustworthy; it's just that people perceive things differently. They perceive things differently partly because they have different viewpoints, but also because they have different understandings of the world.

Furthermore, there isn't a clear divide between an honest account from a different viewpoint and outright propaganda; rather, there's a spectrum. The conjugation is something like this:

- I told the truth

- You may have been stretching the point a little

- He overstated his case

- They were lying

A digression. I am mad. I say this quite frequently, but because most of the time I seem quite lucid I think people tend not to believe me. But one of the problems of being mad - for me - is that I sometimes have hallucinations, and I sometimes cannot disentangle in my memory what happened in dreams from what happened in reality. So I have to treat myself as an unreliable witness - I cannot even trust my own accounts of things which I have myself witnessed.

I suspect this probably isn't as rare as people like to think.

Never mind. Onwards.

The point of this, in constructing a model of news, is that we don't have access to a perfect account of what really happened. We have at best access to multiple contested accounts. How much can we trust them?

Among the trust issues on the Internet are:

- Is this user who they say they are?

- Is this user one of many 'sock puppets' operated by a single entity?

- Is this user generally truthful?

- Does this user have an agenda?

Clues to trustworthiness

I wrote in the CollabPRES essay about webs of trust. I still think webs of trust are the best way to establish trustworthiness. But there have to be two parallel dimensions to the web of trust: there's 'real-life' trustworthiness, and there's reputational - online - trustworthiness. There are people I know only from Twitter whom I nevertheless trust highly; and there are people I know in real life whom I don't necessarily trust as much.

If I know someone in real life, I can answer the question 'is this person who they say they are?'. If the implementation of the web of trust allows me to tag the fact that I know this person in real life, then, if you trust me, you can have confidence that this person exists in real life.

Obviously, if I don't know someone in real life, I can still assess their trustworthiness through my evaluation of the information they post, and in scoring that evaluation I can allow the system to model the degree of my trust in them on each of the different subjects on which they post. If you trust my judgement of other people's trustworthiness, then how much I trust them affects how much you trust them, and the system can model that, too.

[Figure: Alleged follower network of Twitter user DavidJo52951945, alleged to be a Russian sock-puppet]

However, the web of trust has limits. If thirty users of a system all claim to know one another in real life, but none (or only one) of them is known in real life by anyone outside the circle, they may be, for example, islanders on a remote island. But they may also all be sock-puppets operated by the same entity.

Also, a news system based in the southern United States, for example, will have webs of trust biased heavily towards creationist worldviews. So an article on, for example, a new discovery of a dinosaur fossil which influences understanding of the evolution of birds is unlikely to be scored highly for trustworthiness on that system.

I still think the web of trust is the best technical aid to assessing the trustworthiness of an author, but there are some other clues we can use.

There is an interesting thread on Twitter this morning about a user who is alleged to be a Russian disinformation operative. The allegation is based (in part) on the claim that the user posts to Twitter only between 08:00 and 20:00 Moscow time. Without disputing other elements of the case, that point seems to me weak. The user may be in Moscow; or may be (as he claims) in Southampton, but a habitual early riser.

[Figure: Alleged posting times of DavidJo52951945]
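The weakness of the posting-hours argument is easy to see if the same timestamps are read in two time zones. A minimal sketch, using hypothetical posting times and assuming fixed UTC offsets (UTC+3 for Moscow, which has not changed its clocks since 2014, and UTC+1 for British Summer Time in August): a window of 08:00 to 20:00 in Moscow is simply 06:00 to 18:00 in Southampton, so the same pattern fits a Muscovite office worker and an early riser in Hampshire equally well. The function name `active_hours` is illustrative.

```python
from datetime import datetime, timedelta, timezone

# Assumption: fixed offsets for August 2017. Moscow has used UTC+3 all year since 2014;
# the UK is on British Summer Time (UTC+1) in August.
MOSCOW = timezone(timedelta(hours=3), "MSK")
UK_SUMMER = timezone(timedelta(hours=1), "BST")


def active_hours(posts_utc, tz):
    """Earliest and latest local clock hour (0-23) of a set of posts, as seen from zone tz."""
    hours = [post.astimezone(tz).hour for post in posts_utc]
    return min(hours), max(hours)


if __name__ == "__main__":
    # Hypothetical posting times, in UTC.
    posts = [
        datetime(2017, 8, 24, 5, 0, tzinfo=timezone.utc),
        datetime(2017, 8, 24, 11, 30, tzinfo=timezone.utc),
        datetime(2017, 8, 24, 16, 55, tzinfo=timezone.utc),
    ]
    print("Moscow:", active_hours(posts, MOSCOW))          # Moscow: (8, 19)
    print("Southampton:", active_hours(posts, UK_SUMMER))  # Southampton: (6, 17)
```

The two windows differ only by a constant shift, which is why posting hours alone cannot tell the two explanations apart.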

But modern technology allows us to get the location of the device from which a message was sent. If Twitter required that location tracking be enabled (which it doesn't, and, I would argue, shouldn't), then a person claiming to be tweeting from one location using a device in another location would obviously be less trustworthy.

There are more trust cues to be drawn from location. If the location from which a user communicates never moves, then that's a cue. Of course, the person may be housebound, for example, so it's not a strong cue. Equally, people may have many valid reasons for choosing not to reveal their location; but simply having or not having a revealed location is a cue to trustworthiness. Of course, location data can be spoofed. It should never be trusted entirely; it is only another clue to trustworthiness.
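The location cues above can be sketched as a couple of distance checks. A minimal Python sketch, assuming locations arrive as (latitude, longitude) pairs in degrees and using the haversine great-circle formula; the function names and the 50 km and 1 km thresholds are hypothetical choices for illustration. As the text stresses, the output is a set of weak cues to weigh, not a verdict: location can be spoofed, and a missing location may simply mean the user has good reason not to share it.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius of the Earth


def haversine_km(a, b):
    """Great-circle distance in km between two (latitude, longitude) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(h)))


def location_cues(claimed, device_fixes, mismatch_km=50.0, static_km=1.0):
    """
    claimed: where the user says they are posting from.
    device_fixes: locations reported by the user's device (empty if they chose not to share).
    Returns weak cues to weigh, never a verdict: device locations can be spoofed.
    """
    if not device_fixes:
        return {"location_shared": False}
    # Largest gap between the claimed location and any device-reported fix.
    worst_gap = max(haversine_km(claimed, fix) for fix in device_fixes)
    # How far the device has ever been from its first reported position.
    spread = max(haversine_km(device_fixes[0], fix) for fix in device_fixes)
    return {
        "location_shared": True,
        "claim_matches_device": worst_gap <= mismatch_km,
        "never_moves": spread <= static_km,
    }


if __name__ == "__main__":
    southampton = (50.9097, -1.4044)
    moscow = (55.7558, 37.6173)
    # A claim of Southampton from a device that always reports Moscow.
    print(location_cues(southampton, [moscow, moscow]))
```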

It is also alleged that the user claims to have a phone number which is the same as a UKIP phone number in Northern Ireland.

Databases are awfully good at searching for identical numbers. If multiple users all claim to have the same phone number, that must reduce their presumed trustworthiness, and their presumed independence from one another. Obviously, a system shouldn't publish a user's phone number (unless they give it specific permission to do so, which I think most of us wouldn't), but if they have a verified, distinct phone number known to the system, that fact could be published. If they enabled location sharing on the device with that phone number, and their claimed posting location was the same as the location reported by the device, then that fact could be reported.
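Finding accounts that claim the same number is a simple grouping operation once numbers are put into one canonical form. A minimal sketch; the UK-centric normalisation rule (strip everything but digits, treat `00` as the international prefix and a leading `0` as +44) is an assumption for illustration, and a real system would use a proper phone-number parsing library. `publishable_flags` follows the text: the number itself is never exposed, only whether the account has a verified number that no other account shares. All function names are hypothetical.

```python
import re
from collections import defaultdict


def normalise_phone(raw):
    """Crude, UK-centric normalisation (an assumption): digits only, in +44... form."""
    digits = re.sub(r"\D", "", raw)
    if digits.startswith("00"):
        digits = digits[2:]          # international prefix: 0044... -> 44...
    elif digits.startswith("0"):
        digits = "44" + digits[1:]   # UK national format: 07700... -> 447700...
    return "+" + digits


def shared_numbers(claims):
    """claims: {account: phone number as entered}. Returns numbers claimed by more than one account."""
    accounts_by_number = defaultdict(list)
    for account, raw in claims.items():
        accounts_by_number[normalise_phone(raw)].append(account)
    return {number: sorted(accounts)
            for number, accounts in accounts_by_number.items() if len(accounts) > 1}


def publishable_flags(claims, verified):
    """What might be shown publicly: never the number, only whether it is verified and unshared."""
    shared = {a for accounts in shared_numbers(claims).values() for a in accounts}
    return {account: {"verified_number": account in verified,
                      "distinct_number": account not in shared}
            for account in claims}


if __name__ == "__main__":
    claims = {"alice": "07700 900123", "bob": "+44 7700 900123", "carol": "0044-7700-900456"}
    print(shared_numbers(claims))
    print(publishable_flags(claims, verified={"carol"}))
```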

Another digression: people use different identities on different internet systems. Fortunately there are now mechanisms which allow those identities to be tied together. For example, if you trust what I post as simon_brooke on Twitter, you can look me up on keybase.io and see that simon_brooke on Twitter is the same person as simon-brooke on GitHub, and also controls the domain journeyman.cc, which you'll note is the domain of this blog.

So webs of trust can extend across systems, provided users are prepared to tie their Internet identities together.
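One simple way a system might model trust flowing through a web of trust ("how much I trust them affects how much you trust them") is to multiply trust weights along a chain of people and keep the strongest chain, up to a few hops. This is a minimal sketch under stated assumptions: the data model (one graph per subject, weights between 0 and 1), the multiplication rule and the hop limit are all hypothetical, not a description of CollabPRES or any existing system. A separate graph of "I know this person in real life" links could be run through the same function to give the second, real-life dimension. Two properties match the limits discussed above: a closed circle of accounts that only vouch for one another receives no trust unless someone outside it links in, and the result is only ever as good (or as biased) as the people you chose to trust.

```python
def propagated_trust(direct, source, max_hops=3):
    """
    direct: {truster: {trustee: weight in [0, 1]}} for one subject (hypothetical data model).
    Returns, for everyone reachable from source in at most max_hops links, the strength of
    the strongest chain of trust: weights multiply along a chain, so trust decays per hop.
    """
    best = {source: 1.0}
    for _ in range(max_hops):
        # Extend every chain found so far by one link (Bellman-Ford-style relaxation).
        extended = dict(best)
        for person, trust in best.items():
            for other, weight in direct.get(person, {}).items():
                if trust * weight > extended.get(other, 0.0):
                    extended[other] = trust * weight
        best = extended
    best.pop(source, None)
    return best


if __name__ == "__main__":
    # One graph per subject: someone can be trusted on politics but not on football.
    politics = {
        "you": {"simon": 0.9},
        "simon": {"alice": 0.8, "bob": 0.5},
        "alice": {"bob": 0.9},
        # A closed circle vouching only for itself; nobody outside it trusts them.
        "puppet1": {"puppet2": 1.0},
        "puppet2": {"puppet1": 1.0},
    }
    print(propagated_trust(politics, "you"))
```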

The values (and limits) of anonymity

Many people use pseudonyms on the Internet; it has become accepted. It's important for news gathering that anonymity is possible, because news is very often information that powerful interests wish to suppress or distort; without anonymity there would be no whistle-blowers and no leaks; and we'd have little reliable information from repressive regimes or war zones.

So I don't want to prevent anonymity. Nevertheless, a person with a (claimed) real world identity is more trustworthy than someone with no claimed real world identity, a person with an identity verified by other people with claimed real world identities is more trustworthy still, and a person with a claimed real world identity verified by someone I trust is yet more trustworthy.

So if I have two stories from the siege of Raqqa, for example, one from an anonymous user with no published location claiming to be in Raqqa, and the other from a journalist with a published location in Glasgow, who has a claimed real-world identity which is verified by people I know in the real world, and who claims in his story to have spoken by telephone to (anonymous) people whom he personally knows in Raqqa, which do I trust more? Undoubtedly the latter.

Of course, if the journalist in Glasgow who is known by someone I know endorses the identity of the anonymous user claiming to be in Raqqa, then the trustworthiness of the first story increases sharply.

So we must allow anonymity. We must allow users to hide their location, because unless they can hide their location anonymity is fairly meaningless (in any case, precise location is only really relevant to eye-witness accounts, so a person who allows their location to be published within a 10 km box may be considered more reliable than one who doesn't allow their location to be published at all).
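Publishing a location only "within a 10 km box" can be done by snapping the point to a fixed grid and publishing the grid cell rather than the point. A minimal sketch, assuming roughly 111.2 km per degree of latitude (adequate at this scale away from the poles) and widening cells in longitude to compensate for meridians converging; `coarse_box` is a hypothetical name. Because everyone in the same cell publishes the same box, the box reveals nothing finer than the cell, though it gives little cover in a cell where very few people live.

```python
import math

KM_PER_DEGREE_LAT = 111.2  # approximate length of one degree of latitude


def coarse_box(lat, lon, box_km=10.0):
    """
    Snap a point to a fixed grid cell roughly box_km on a side and return the cell
    as (south, west, north, east) in degrees, so the exact point is never published.
    """
    dlat = box_km / KM_PER_DEGREE_LAT
    south = math.floor(lat / dlat) * dlat
    # A degree of longitude shrinks with latitude, so widen the cell in degrees to keep ~box_km.
    dlon = box_km / (KM_PER_DEGREE_LAT * math.cos(math.radians(south + dlat / 2)))
    west = math.floor(lon / dlon) * dlon
    return (south, west, south + dlat, west + dlon)


if __name__ == "__main__":
    # Two nearby points in Glasgow fall in the same published box.
    print(coarse_box(55.8642, -4.2518))
    print(coarse_box(55.8650, -4.2520))
```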

Conclusion

We live in a world in which we have no access to 'objective reality', if such a thing even exists. Instead, we have access to multiple, contested accounts. Nevertheless, there are potential technical mechanisms for helping us to assess the trustworthiness of an account. A news system for the future should build on those mechanisms.

Thursday, 24 August 2017

Challenges in the news environment

|

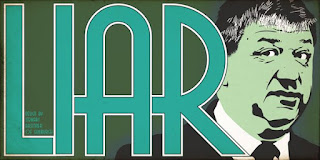

| Alistair Carmichael, who proved that politicians can lie with impunity. Picture by Stewart Bremner |

Time moves on; there are other problems in the news publishing environment which existed when I wrote the CollabPRES essay in 2005 but which are much more starkly apparent to me now; and there are some issues which are authentically new.

Why News Matters

Challenges to the way news media works are essentially challenges to democracy. Without a well-informed public, who understand the issues they are voting on and the potential consequences of their decisions, who have a clear view of the honesty, integrity and competence of their politicians, meaningful democracy becomes impossible.

We need to create a media which is fit for - and defends - democracy; this series of essays is an attempt to propose how we can do that.

So what's changed in the past twelve years? Let's start with those changes which have been incremental.

History Repeats Itself

Proprietors have always used media to promote their political interests, and, for at least a century, ownership of media has been concentrated in the hands of a very few, very rich white men whose interests are radically different from those of ordinary folk. That's not new. But over the past few years the power this gives has been used more blatantly and more shamelessly. Furthermore, there was once an assumption that things the media published would be more or less truthful, and that (if they weren't) that was a matter of shame. No longer. Fake News is all too real.

Politicians have always lied, back to the beginnings of democracy. But over the past decade we've seen a series of major elections and referenda swung by deliberate mendacity, and, as the Liar Carmichael scandal shows, politicians now lie with complete impunity. Not only are they not shamed into resigning, not only are they not sacked, successful blatant liars like Liar Carmichael, Boris Johnson, David Davis, Liam Fox and, most starkly of all, Donald Trump, are quite often promoted.

Governments have for many years tried to influence opinion in countries abroad, to use influence over public opinion as at least a diplomatic tool, at most a mechanism for regime change. That has been the purpose of the BBC World Service since its inception. But I think we arrogantly thought that as sophisticated democracies we were immune to such manipulation. The greater assertiveness and effectiveness of Russian-owned media over the past few years has clearly shown we're not.

The Shock of the New: Social Media

Other changes have been revolutionary. Most significant has been the rise of social media, and the data mining it enables. Facebook was a year old when I wrote my essay; Twitter didn't yet exist. There had been precursors - I myself had used Usenet since 1986, others had used AOL and bulletin boards. But these all had moderately difficult user interfaces, and predated the mass adoption of the Internet. It was the World Wide Web (1991) that made the Internet accessible to non-technical people, but adoption still took time.

Six Degrees, in 1997, was the first recognisably modern social media platform, which tracked users' relationships with one another. Social Media has a very strong 'Network Effect' - if your friends are all on a particular social media platform, there's a strong incentive for you to be on the same platform. If you use a different social media platform, you can't communicate with them. Thus social media is in effect a natural monopoly, and this makes the successful social media companies - which means, essentially, Facebook, Facebook and Facebook - immensely powerful.

What makes social media a game changer is that it allows - indeed facilitates - data mining. The opinions and social and psychological profiles of users are the product that the social media companies actually sell to fund their operations.

We've all sort of accepted that Google and Facebook use what they learn from their monitoring of our use of their services to sell us commercial advertising, as an acceptable cost of having free use of their services. It is, in effect, no more than an extension of, and in many ways less intrusive than, the 'commercial breaks' in commercial television broadcasts. We accept that as kind-of OK.

It becomes much more challenging, however, when they market that data to people who will use sophisticated analysis to precisely target (often mendacious) political messages at very precisely defined audiences. There are many worrying aspects to this, but one worrying aspect is that these messages are not public: we cannot challenge the truth of messages targeted at audiences we're not part of, because we don't see the messages. This is quite different from a billboard which is visible to everyone passing, or a television party-political broadcast which is visible to all television watchers.

There's also the related question of how Facebook and Twitter select the items they prioritise in the stream of messages they show us. Journalists say "it's an algorithm", as if that explained everything. Of course it's an algorithm; everything computers do is an algorithm. The question is, what is the algorithm, who controls it, and how we can audit it (we can't) and change it (see above).

The same issue, of course, applies to Google. Google's search engine outcompeted all others (see below) because its ranking algorithm was better - specifically, was much harder to game. The algorithm which was used in Google's early days is now well known, and we believe that the current algorithm is an iterative development on that. But the exact algorithm is secret, and there is a reasonable argument that it has to be secret because if it were not we'd be back to the bad old days of gaming search engines.
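The early algorithm referred to here is PageRank, which Sergey Brin and Larry Page published in 1998. Its core idea is that a page's score depends on the scores of the pages linking to it, which is much harder to inflate than, say, the number of times a keyword appears on a page. Below is a minimal power-iteration sketch of that published formulation, assuming the damping factor of 0.85 suggested in the original paper and a fixed number of iterations; it says nothing about Google's current, secret algorithm.

```python
def pagerank(links, damping=0.85, iterations=50):
    """
    Power-iteration PageRank over a link graph given as {page: [pages it links to]}.
    Returns {page: score}; the scores sum to 1.
    """
    pages = set(links) | {target for targets in links.values() for target in targets}
    if not pages:
        return {}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Rank held by pages with no outgoing links is spread evenly over all pages.
        dangling = sum(rank[page] for page in pages if not links.get(page))
        new_rank = {page: (1.0 - damping) / n + damping * dangling / n for page in pages}
        # Every other page passes its rank, damped, equally to the pages it links to.
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


if __name__ == "__main__":
    web = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
    print(pagerank(web))
```

Gaming a ranking like this means acquiring links from pages that are themselves highly ranked, which is far harder than stuffing keywords into a page you control.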

Search, in an Internet world, is a vital public service; for the sake of democracy it needs to be reasonably 'objective' and 'fair'. There's a current conspiracy theory that Google is systematically down rating left-wing sites. I don't know whether this is true, but if it is true it is serious; and, whatever the arguments about secrecy, for the sake of democracy we need Google's algorithm, too, to be auditable, so that it can be shown to be fair.

Tony Benn's five famous questions apply just as much to algorithms as to officials:

- What power have you got?

- Where do you get it from?

- In whose interests do you exercise it?

- To whom are you accountable?

- How do we get rid of you?

Facebook and Google need to answer these questions on behalf of their algorithms, of course; but in designing a system to provide the future of news, so do we.

To Everything, There is a Season

There's a lot to worry about in this situation, but there is some brightness to windward: we live in an era of rapid change, and we can, in aggregate, influence that change.

Changing how the news media works may not be all that hard, because the old media is caught in a tight financial trap between the rising costs of printing and distribution and the falling revenues from print advertising. Serious thinkers in the conventional media acknowledge that change is necessary and are searching for ways to make it happen.

Creating new technology and a new business model for the news media is the principal objective of this series of essays; but it isn't the only thing that needs to be done, if we are to create an environment in which Democracy can continue to thrive.

Changing how social media works is harder, because of the network effect. To topple Facebook would require a concerted effort by very many people in very many countries across the globe. That's hard. But looking at the history of search engines shows us it isn't impossible.

I was responsible for one of the first 'search engines' back in 1994; Scotland.org was intended to be a directory to all Scottish websites. It was funded by the SDA and was manually curated. Yahoo appeared - also as a manually curated service - at about the same time. But AltaVista, at the same time, launched a search engine which was based on an automated spidering of the whole Web (it wasn't the first engine to do this), which, because of its speed and its uncluttered interface, very rapidly became dominant.

Where is AltaVista now? Gone. Google came along with a search engine which was much harder to 'game', and which consequently produced higher-quality search results, and AltaVista died.

Facebook is now a hugely overmighty subject, with enormous political power; but Facebook will die. It will die surprisingly suddenly. It will die when a large enough mass of people find a large enough motivation to switch. How that may be done will be the subject of another essay.

Wednesday, 23 August 2017

How do we pay for search?

[This is taken from a Twitter thread I posted on June 27th. I'm reposting it here now because it's part of the supporting argument for a larger project I'm working on about the future of news publishing]

Seriously, folks, how do we want to pay for search? It's a major public service of the Internet age. We all use it. Google (and Bing, and others) provide us with search for free and fund it by showing us adverts and links to their own services.

But search is not free. The effort of cataloging & indexing all the information on the web costs serious compute time. Literally thousands - possibly by now millions - of computers operate 24 hours a day every day just doing that and nothing else. Another vast army of computers sits waiting for our search queries and serving us responses quickly and efficiently. All this costs money: servers, buildings, vast amounts of electricity, bandwidth. It's not free. It's extremely expensive.

Google's implicit contract with us is that we supply them with information about ourselves through what we search for, and also look at the adverts they show us, and in return Google (or Bing, or...) supplies us with an extraordinarily powerful search service.

The EU say it's not OK for Google to show us adverts for their own services (specifically, their price comparison service). Why is it not OK? We all understand the implicit contract. It's always been OK for other media to show adverts for their own stuff. How many trailers for other programmes does the BBC (or ITV, or Sky...) show in a day? You can't watch BBC TV without seeing trails for other BBC services/programmes. The BBC don't show trails for Sky, nor Sky for BBC.

Search is really important. It is important that it should be as far as possible unbiased, neutral, apolitical. Trusted. But Google knows that its whole business is based on trust. It is greatly in its interests to run an impartial search service.

So I don't understand why it's wrong for Google to do this. But if we think it is wrong, how do we want to pay for search? I can perfectly see an argument that search is too important to be entrusted to the private sector, that search ought to be provided by an impartial, apolitical public service - like e.g. a national library - funded out of taxation.

But the Internet is international, so if it was a public sector organisation it would in effect be controlled by the UN. This is an imperfect world, with imperfect institutions. Not all members of the UN are democracies. The Saudis sit on the UN Women's Rights Commission. Do we want them controlling search?

I am a communist. I inherently trust socialised institutions above private ones. But we all know the implicit contract with Google. I value search; for me, it is worth being exposed to the adverts that Google shows me, and sharing a bit of personal information. But, if we as a society choose, as the EU implies, not to accept that contract, how do we as a society want to pay for search?

Tuesday, 22 August 2017

CollabPRES: Local news for an Internet age

(This is an essay I wrote on December 30th, 2005; it's a dozen years old. Please bear this in mind when reading it; things on the Internet change awfully fast. I'm republishing it now because it contains a lot of ideas I want to develop over the next few weeks.)

The slow death of newsprint

Local newspapers have always depended heavily on members of the community, largely unpaid, writing content. As advertising increasingly migrates to other media and the economic environment for local newspapers gets tighter, this dependency on volunteer contributors can only grow.

At the same time, the major costs for local papers are printing and distribution. In the long run, local news must move to some form of electronic delivery; but for the present, a significant proportion of the readership is aging and technology-averse, and will continue to prefer flattened dead trees.

Approaches to local Internet news sites

I've been building systems to publish local news on the Internet for six years now. In that time, most local news media have developed some form of Internet presence. Almost without exception, these Internet news sites have been modelled (as the ones I've written have) on the traditional model of a local newspaper or magazine: an editor and some journalists have written all the content, and presented it, static and unalterable, before the waiting public.

I've become less and less convinced by this model. Newer Internet systems in areas other than local news have been exploiting the technology in much more interesting ways. And reading recent essays by other people involved in this game whom I respect, it seems they're thinking the same. So how can we improve on this?

PRES

PRES is a web application I built six years ago to serve small news sites. It's reasonably good, stable and reliable, but there's nothing particularly special about PRES, and it stands here merely as an example of a software system for driving a relatively conventional Internet news site. It's just a little bit more sophisticated than a 'blog' engine.

PRES revolves around the notion of an editor – who can approve stories; authors – who can contribute stories; subscribers – who are automatically alerted, by email, to new stories in their areas of interest, and who may be able to contribute responses or comments to stories; and general readers, who simply read the stories on the Web. It organises stories into a hierarchy of 'categories', each of which may have different presentation. Within each category one article may be nominated by the editor as the 'lead article', always appearing at the top of the category page. Other articles are listed in chronological order, the most recent first. By default only eight 'non-lead' stories are shown in any category, so articles 'age out' of the easily navigated part of the website automatically as new articles are added.
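The category-page rule described above (one editor-nominated lead article, then a fixed number of other stories, newest first) can be sketched in a few lines of Python. This is not PRES's actual code; the `Story` record and the `category_page` function are hypothetical names, and the default limit of eight simply follows the description above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Story:
    id: str
    category: str
    published: float  # hypothetical timestamp; larger means newer


def category_page(stories: List[Story], category: str,
                  lead_id: Optional[str] = None, limit: int = 8) -> List[Story]:
    """Lead article (if any) first, then the `limit` most recent other stories."""
    in_category = [s for s in stories if s.category == category]
    lead = [s for s in in_category if s.id == lead_id]
    others = sorted((s for s in in_category if s.id != lead_id),
                    key=lambda s: s.published, reverse=True)
    # Anything beyond the slice is no longer listed: it has 'aged out'.
    return lead + others[:limit]
```

With ten stories in a category and the oldest nominated as lead, the page shows the lead followed by the eight newest; the ninth-newest story has dropped off.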

PRES also offers flexible presentation and does a number of other useful news-site related things such as automatically generating syndication feeds, automatically integrating Google advertising, and providing NITF (News Industry Text Format) versions of all articles; but all in all it's nothing very special, and it's included here mainly to illustrate one model of providing news.

This model is 'top down'. The editor – a special role - determines what is news, by approving stories. The authors – another special role – collect and write the news. The rest of the community are merely consumers. The problem with this approach is that it requires a significant commitment of time from the editor, particularly, and the authors; and that it isn't particularly sensitive to user interest.

WIKI

A 'wiki' is a collaborative user-edited web site. In a classic wiki there are no special roles; every reader is equal and has equal power to edit any part of the web site. This model has been astoundingly successful; very large current wiki projects include Wikipedia, which, at the time of writing, after only five years, has over 2 million articles in over 100 languages. The general quality of these articles is very high, and I have come to use Wikipedia to get a first overview of subjects of which I know little. It is, by far, more comprehensive and more up to date than any print encyclopedia; and it is so precisely because it makes it so easy for people who are knowledgeable about a particular subject to contribute both original articles and corrections to existing articles.

However, as with all systems, in this strength is its weakness. Wikipedia allows anyone to contribute; it allows anyone to contribute anonymously, or simply by creating an account; and in common with many other web sites it has no means of verifying the identity of the person behind any account. It treats all edits as being equal (with, very exceptionally, administrative overrides). Wikipedia depends, then, on the principle that people are by and large well motivated and honest; and given that most people are by and large well motivated and honest, this works reasonably well.

But some people are not well motivated or honest, and Wikipedia is very vulnerable to malice, sabotage and vandalism, and copes poorly with controversial topics. Particular cases involve the malicious posting of information the author knows to be untrue. In May of this year an anonymous user, since identified, edited an article about an elderly and respected American journalist to suggest that he had been involved in the assassinations of John F and Robert Kennedy. This went uncorrected for several months, and led to substantial controversy about Wikipedia in the US.

Similarly, many articles concern things about which people hold sharply different beliefs. This can lead to two groups of editors with different beliefs persistently changing the article, see-sawing it between two very different texts. Wikipedia's response to this is to lock such articles, and to head them with a warning, 'the neutrality of this article is disputed'. An example here at the time of writing is the article about Abu Bakr, viewed by Sunni Muslims as the legitimate leader of the faithful after the death of Mohamed, and by Shia Muslims as a usurper.

However, these problems are not insurmountable, and, indeed, Wikipedia seems to be coping with them very well by developing its own etiquette and rules of civil society.

Finally, using a WIKI is a little intimidating for the newcomer, since special formatting of the text is needed to make (e.g.) links work.

The Wiki model is beginning to be applied to news, not least by Wikipedia's sister project WikiNews. This doesn't seem to me yet to be working well (see 'Interview with Jimmy Wales' in further reading). Problems include that most contributors are reporting, second hand, information they have gleaned from other news sources; and that attempting to produce one global user-contributed news system is out of scale with the level of commitment yet available, and with the organising capabilities of the existing software.

This doesn't mean, of course, that a local NewsWiki could not be successful; indeed, I believe it could. Local news is by definition what's going on around people; local people are the first-hand sources. And it takes only a relatively small commitment from a relatively small group of people to put local news together.

Karma and Webs of Trust

The problem of who to trust as a contributor is, of course, not unique to a wiki; on the contrary, it has been best tackled so far, I believe, by the discussion system Slashcode, developed to power the Slashdot.org discussion site. Slashcode introduces two mechanisms for scoring user-contributed content which are potentially useful to a local news system. The first is 'karma', a general score of the quality of a user's contributions. Trusted users (i.e., to a first approximation, those with high 'karma') are, from time to time, given 'moderation points'. They can spend these points by 'moderating' contributions – marking them as more, or less, valuable. The author of a contribution that is marked as valuable is given an increment to his or her karma; the author of a contribution marked down loses karma. When a new contribution is posted, its initial score depends on the karma of its author. This automatic calculation of 'karma' is, of course, not essential to a karma-based system; karma points could simply be awarded by an administrative process. But it illustrates that automatic karma is possible.

The other mechanism is the 'web of trust'. Slashcode's implementation of the web of trust idea is fairly simple and basic: any user can make any other user a 'friend' or a 'foe', and can decide to modify the scores of contributions by friends, friends of friends, foes, and foes of friends. For example, I modify contributions by my 'friends' by +3, which tends to bring them to the top of the listing so I'm likely to see them. I modify contributions by 'friends of friends' by +1, so I'm slightly more likely to see them. I modify contributions by 'foes' by -3, so I'm quite unlikely to see them.
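To make the two Slashcode mechanisms concrete, here is a minimal Python sketch of karma and of friend/foe score modifiers. It is not Slashcode's real code: the karma threshold of 25, the starting scores of 1 and 2, and the foe-of-friend modifier of -1 are assumptions; the +3, +1 and -3 values are the ones given above. Note that it deliberately reproduces the limitations discussed next: only two steps, and no extra weight for being a friend of several friends.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

GOOD_KARMA = 25  # hypothetical threshold; Slashcode's real rules differ in detail


@dataclass
class User:
    name: str
    karma: int = 0
    friends: Set[str] = field(default_factory=set)
    foes: Set[str] = field(default_factory=set)
    # Per-reader modifiers: friend/friend-of-friend/foe values follow the essay,
    # the foe-of-friend value is an assumption.
    modifiers: Dict[str, int] = field(default_factory=lambda: {
        "friend": 3, "friend_of_friend": 1, "foe": -3, "foe_of_friend": -1})


def initial_score(author: User) -> int:
    """A new contribution starts one point higher if its author has good karma."""
    return 2 if author.karma >= GOOD_KARMA else 1


def moderate(author: User, valuable: bool) -> None:
    """A moderation point spent on a contribution adjusts its author's karma."""
    author.karma += 1 if valuable else -1


def score_for_reader(base: int, author: str, reader: User,
                     users: Dict[str, User]) -> int:
    """Apply the reader's friend/foe modifiers: two steps only, not additive."""
    m = reader.modifiers
    if author in reader.friends:
        return base + m["friend"]
    if author in reader.foes:
        return base + m["foe"]
    my_friends = [users[n] for n in reader.friends if n in users]
    # any() means three friend-links count the same as one.
    if any(author in f.friends for f in my_friends):
        return base + m["friend_of_friend"]
    if any(author in f.foes for f in my_friends):
        return base + m["foe_of_friend"]
    return base
```

If Alice befriends Bob, Bob befriends Carol, and Alice marks Dave as a foe, then a contribution with base score 1 shows to Alice as 4 from Bob, 2 from Carol, -2 from Dave, and 1 from anyone else.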

Slashdot's web of trust, of course, only operates if a user elects to trust other people, only operates at two steps' remove (i.e. friends and friends of friends, but not friends of friends of friends) and is not additive (i.e. if you're a friend of three friends of mine, you aren't any more trusted than if you're a friend of only one friend of mine). Also, I can't qualify the strength of my trust for another user: I can neither say “I trust Andrew 100%, but Bill only 70%”, nor say “I trust Andrew 100% when he's talking about agriculture, but only 10% when he's talking about rural transport”.

To take these issues in turn: first, there's no reason why there should not be a 'default' web of trust, either maintained by an administrator or maintained automatically. Similarly, there's no reason why an individual's trust relationships should not be maintained at least semi-automatically.

Secondly, trust relationships can be subject specific, and thus webs of trust can be subject specific. If Andrew is highly trusted on agriculture, and Andrew trusts Bill highly on agriculture, then it's highly likely that Bill is trustworthy on agriculture. But if Andrew is highly trusted on agriculture, and Andrew trusts Bill highly on cars, it doesn't necessarily imply that Bill is to be trusted on cars. If a news site is divided into subject specific sections (as most are) it makes sense that the subjects for the trust relationships should be the same as for the sections.
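A subject-specific, weighted web of trust that reaches further than two steps might look like the sketch below. The essay does not prescribe a formula; here, as an assumption, trust is a weight between 0 and 1, it decays multiplicatively along a chain (if I trust Andrew 1.0 and Andrew trusts Bill 0.7, I trust Bill 0.7), and the best chain wins. The `trust_from` function, the nested-dictionary layout and the default depth of three steps are hypothetical. A 'default' web of trust is simply the same calculation started from an administrator-maintained root instead of the reader.

```python
from typing import Dict

# trust[subject][truster][trustee] -> weight in [0, 1]
TrustGraph = Dict[str, Dict[str, Dict[str, float]]]


def trust_from(graph: TrustGraph, root: str, subject: str,
               max_depth: int = 3) -> Dict[str, float]:
    """Best (highest-product) trust in each user reachable from `root`
    within `max_depth` steps, using only trust edges for `subject`."""
    edges = graph.get(subject, {})
    best = {root: 1.0}
    frontier = {root: 1.0}
    for _ in range(max_depth):
        improved: Dict[str, float] = {}
        for truster, t in frontier.items():
            for trustee, weight in edges.get(truster, {}).items():
                score = t * weight  # trust decays multiplicatively along a chain
                if score > max(best.get(trustee, 0.0), improved.get(trustee, 0.0)):
                    improved[trustee] = score
        best.update(improved)
        frontier = improved  # only users whose trust rose need re-expanding
        if not frontier:
            break
    return best
```

Because the graph is keyed by subject, Andrew's trust in Bill on cars contributes nothing when the reader asks about agriculture. Combining several independent chains additively, rather than taking the best one, would be a natural refinement.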

What is news?

So what is news? News is what is true, current and interesting. Specifically, it is what is interesting to your readers. Thus it is possible to tune the selection of content in a news site by treating page reads as voting, and giving more frequently read articles more priority (or, a slightly more sophisticated variant of the same idea, giving pages a score based on a formula computed from the number of reads and a 'rate this page' scoring input).

The problem with a simple voting algorithm is that if you prioritise your front page (or subcategory – 'inner' pages) by reads, then your top story is simply your most read story, and top stories will tend to lock in (since they are what a casual reader sees first). There has to be some mechanism to bring very new stories to the attention of readers, so that they can start to be voted on. And there has to be some mechanism to value more recent reads higher than older ones.
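One way (an assumption, not something the essay specifies) to value recent reads above older ones is exponential decay: each read or rating counts for less as it ages, halving every `half_life` hours. The sketch below combines decayed reads with decayed 'rate this page' votes; the function name, the 24-hour half-life and the rating weight of 2 are all hypothetical tuning choices.

```python
from typing import List, Tuple


def article_score(read_times: List[float], ratings: List[Tuple[float, float]],
                  now: float, half_life: float = 24.0,
                  rating_weight: float = 2.0) -> float:
    """Score an article from its reads and 'rate this page' inputs.

    read_times: when each read happened (hours from any fixed origin).
    ratings: (time, value) pairs, value in [-1, 1].
    Each event's weight halves every `half_life` hours.
    """
    def weight(t: float) -> float:
        return 0.5 ** ((now - t) / half_life)

    reads = sum(weight(t) for t in read_times)
    rating = sum(weight(t) * value for t, value in ratings)
    return reads + rating_weight * rating
```

At hour 48, an article read twice (at hours 48 and 24) scores 1.5, while one read four times two days earlier scores only 1.0, so a fresh story can overtake an old favourite instead of the top story locking in.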

So your front page needs to comprise an ordered list of your currently highest scoring articles, and a list of your 'breaking news' articles – the most recently added to your system. How do you determine an ordering for these recent stories?

They could simply be the most recent N articles submitted. However, there is a risk that the system could be 'spammed' by one contributor submitting large numbers of essentially similar articles. Since without sophisticated text analysis it is difficult to determine automatically whether articles are 'essentially similar', it might be reasonable to suggest that only highly trusted contributors should be able to have more than one article in the 'breaking news' section at a time.
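The front page described above, an ordered list of top-scoring articles plus a 'breaking news' list of the newest articles with at most one slot per contributor unless that contributor is highly trusted, can be sketched as follows. The names, the 0.8 trust threshold, the list sizes, and the choice to keep breaking stories out of the top list are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Article:
    id: str
    author: str
    submitted: float  # hypothetical timestamp; larger means newer


def breaking_news(articles: List[Article], author_trust: Dict[str, float],
                  n: int = 5, trusted_threshold: float = 0.8) -> List[Article]:
    """Newest articles first, at most one per contributor unless that
    contributor's trust reaches `trusted_threshold`."""
    chosen: List[Article] = []
    per_author: Dict[str, int] = {}
    for a in sorted(articles, key=lambda a: a.submitted, reverse=True):
        if len(chosen) == n:
            break
        count = per_author.get(a.author, 0)
        if count >= 1 and author_trust.get(a.author, 0.0) < trusted_threshold:
            continue  # an untrusted contributor already has a slot
        chosen.append(a)
        per_author[a.author] = count + 1
    return chosen


def front_page(articles: List[Article], scores: Dict[str, float],
               author_trust: Dict[str, float], n_top: int = 8,
               n_breaking: int = 5) -> Tuple[List[Article], List[Article]]:
    """Return (top-scoring articles, breaking news), without duplicates."""
    breaking = breaking_news(articles, author_trust, n_breaking)
    shown = {a.id for a in breaking}
    top = sorted((a for a in articles if a.id not in shown),
                 key=lambda a: scores.get(a.id, 0.0), reverse=True)[:n_top]
    return top, breaking
```

If an untrusted contributor submits three near-identical stories in a row, only the newest reaches 'breaking news'; a trusted contributor's two stories both appear.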

The next issue is who should be able to contribute stories, and who should be able to edit them. The wiki experience suggests that the answer to both should be 'everyone', with the possible proviso that, to prevent sabotage and vandalism, you should probably require users to identify themselves to the system before being allowed to contribute.

As Robin Miller says

“No matter how much I or any other reporter or editor may know about a subject, some of the readers know more. What's more, if you give those readers an easy way to contribute their knowledge to a story, they will.”

Consequently, creating and editing new stories should be easy, and available to everyone. Particularly with important, breaking stories, new information may be becoming available all the time, and some new information will become available to people who are not yet trusted contributors. How, then, do you prevent a less well informed but highly opinionated contributor overwriting an article by a highly trusted one?

'Show newer, less trusted versions'

In wikis it is normal to hold all the revisions of an article. What is novel in what I am suggesting here is that rather than by default showing the newest revision of an article, as wikis typically do, by default the system should show the newest revision by the most trusted contributor according to the web of trust of the reader for the subject area the article is in, if (s)he has one, or else according to the default web of trust for that subject. If there are newer revisions in the system, a link should be shown entitled 'show newer, less trusted versions'. Also, when a new revision of a story is added to the system, email should be automatically sent to the most trusted previous contributor to the article according to the default web of trust, and to the sub-editor of the section if there is one, or else to the contributor(s) most trusted in that section.

All this means that casual users will always see the most trusted information, but that less casual users will be able to see breaking, not yet trusted edits, and that expert contributors will be alerted to new information so that they can (if they choose) 'endorse' the new revisions and thus make them trusted.
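Choosing which revision to display can be sketched as follows, assuming each reader's web of trust has already been reduced to a name-to-trust mapping for the article's subject. As an assumption consistent with the idea that endorsement makes a revision trusted, a revision is treated here as being as trusted as the most trusted of its author and endorsers; among equally trusted revisions the newest wins. The `Revision` record and `revision_to_show` function are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class Revision:
    number: int
    created: float  # hypothetical timestamp; larger means newer
    author: str
    endorsers: Set[str] = field(default_factory=set)


def revision_trust(rev: Revision, trust: Dict[str, float]) -> float:
    """A revision is as trusted as the most trusted of its author and endorsers."""
    return max(trust.get(name, 0.0) for name in {rev.author, *rev.endorsers})


def revision_to_show(revisions: List[Revision],
                     reader_trust: Optional[Dict[str, float]],
                     default_trust: Dict[str, float]
                     ) -> Tuple[Revision, List[Revision]]:
    """Return (revision to display, newer but less trusted revisions)."""
    # Fall back to the default web of trust if the reader has none (or it is empty).
    trust = reader_trust or default_trust
    # Most trusted first; among equally trusted revisions, the newest wins.
    shown = max(revisions, key=lambda r: (revision_trust(r, trust), r.created))
    newer = [r for r in revisions if r.created > shown.created]
    return shown, newer
```

A non-empty `newer` list is what would drive the 'show newer, less trusted versions' link; once a trusted contributor endorses the newest revision, it becomes the one shown and the list empties.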

Maintaining the Web of Trust

Whenever a contributor endorses the contribution of another contributor, that's a strong indication of trust. Of course, you may think that a particular contribution is valuable without thinking that its author is generally reliable. So your trust for another contributor should not simply be a measure of your recent endorsement of their work. Furthermore, we need to provide simple mechanisms for people who are not highly ranked contributors to maintain their own personal web of trust.

Fortunately, if we're already thinking of a 'rate this page' control, HTML gives us the rather neat but rarely used image control, a rectangular image which returns the X, Y co-ordinates of where it was clicked. This could easily be used to construct a one-click control which scores 'more trusted/less trusted' on one axis, and 'more interesting/less interesting' on the other.
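In HTML the image control is `<input type="image" name="rate" src="rate.png">` (the image file name is hypothetical); when it is clicked inside a form submitted with GET, the browser sends the click position as `rate.x` and `rate.y`. The server-side sketch below turns that into two scores. The axis layout (trust left to right, interest top to bottom), the 200×200 pixel size and the `parse_rating` name are assumptions.

```python
from typing import Tuple
from urllib.parse import parse_qs


def parse_rating(query: str, name: str = "rate",
                 width: int = 200, height: int = 200) -> Tuple[float, float]:
    """Turn an image-control click into (trust, interest), each in [-1, 1].

    Assumed layout: left = less trusted, right = more trusted;
    top = more interesting, bottom = less interesting.
    """
    params = parse_qs(query)
    x = int(params[f"{name}.x"][0])
    y = int(params[f"{name}.y"][0])
    x = min(max(x, 0), width)    # guard against out-of-range coordinates
    y = min(max(y, 0), height)
    trust = 2 * x / width - 1
    interest = 1 - 2 * y / height  # image y co-ordinates grow downwards
    return trust, interest
```

A click in the top-right corner (`rate.x=200&rate.y=0`) yields `(1.0, 1.0)`: fully trusted and very interesting, recorded with a single click.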

Design of CollabPRES

CollabPRES is a proposal for a completely new version of PRES with some of the features of a WIKI and an advanced web of trust system. There will still be one privileged role: an Administrator, who will be able to create and manage categories (sections) and, in exceptional circumstances, to remove articles and to remove privileges from other users. An article will not exist as a record in itself but as a collection of revisions. Each revision will be tagged with its creator (a contributor) and with an arbitrary number of endorsers (also contributors). In order to submit or edit an article, or to record an opinion of the trustworthiness of an article, a contributor must first log in and identify themselves to the system. Contributors will not be first-class users authenticated against the RDBMS but second-class users authenticated against the application. There will probably not be a threaded discussion system since, as the article itself is editable, a separate mechanism seems unnecessary.

Whether contributors are by default allowed to upload photographs will be a decision for the site administrator. Where contributors are not by default permitted to upload images, the administrator will be able to grant that privilege to particular contributors.
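The core of this data model (an article that exists only as a list of revisions, each with a creator and any number of endorsers; writing only by logged-in contributors; image uploads governed by a site default plus per-contributor grants) can be sketched in Python. CollabPRES was only ever a proposal, so every class and function name here is hypothetical, and a logged-out visitor is represented, as an assumption, by `None`.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


class NotLoggedIn(Exception):
    """Raised when an anonymous visitor tries to write or endorse."""


@dataclass
class Contributor:
    name: str
    may_upload_images: bool = False  # granted individually by the Administrator


@dataclass
class Revision:
    author: str
    text: str
    endorsers: Set[str] = field(default_factory=set)


@dataclass
class Article:
    section: str
    # The article has no content of its own: it *is* its list of revisions.
    revisions: List[Revision] = field(default_factory=list)

    def add_revision(self, who: Optional[Contributor], text: str) -> Revision:
        if who is None:
            raise NotLoggedIn("log in before editing")
        rev = Revision(author=who.name, text=text)
        self.revisions.append(rev)
        return rev

    def endorse(self, index: int, who: Optional[Contributor]) -> None:
        if who is None:
            raise NotLoggedIn("log in before endorsing")
        self.revisions[index].endorsers.add(who.name)


def can_upload_images(who: Contributor, allowed_by_default: bool) -> bool:
    """Site-wide default chosen by the Administrator, plus per-contributor grants."""
    return allowed_by_default or who.may_upload_images
```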

In order to make it easier for unsophisticated users to add and edit stories, it will be possible to upload a pre-prepared text, HTML, OpenOffice, or (ideally, if possible) MSWord file as an alternative to editing text in an HTML textarea control.

To be successful, CollabPRES must have means of integrating both local and national advertising into the output. At present this paper does not address that need.

Finally, there must still be an interaction between the website and the printed page, because many of the consumers of local news still want hard copy, and will do for at least some years to come.

Whereas in most current local papers the website is at best an adjunct to the printed paper, CollabPRES turns that on its head by generating the layout of the printed paper automatically from the content currently on the website. At some point on press day, the system will use XSL to transform CollabPRES's native XML formats into PostScript, PDF, or whatever SGML format the paper's desktop publishing software uses, producing the full content of the paper in a ready-to-print format, ready to be printed to film and exposed onto the litho plate. If the transform has been set up correctly to the paper's house style, there should be no need for any human intervention at all.

Obviously editors may not want to be muscled out of this process and may still want to have the option of some final manual adjustment of layout; but that should no longer be the role of the editor of a local paper in a CollabPRES world. Rather, the role of the editor must be to go out and recruit, encourage and advise volunteer contributors, cover (or employ reporters to cover) those stories which no volunteers are interested in, and monitor the quality of contributions to the system, being the contributor of last resort, automatically 100% trusted, who may tidy up any article.

CollabPRES and the local news enterprise

Technology is not a business plan. Technology is just technology. But technology can support a business plan. Local news media need two things, now. They need to lower their costs. And they need to engage their communities. CollabPRES is designed to support these needs. It provides a mechanism for offloading much of the gathering and authoring of news to community volunteers. It automates much of the editing and prioritisation of news. But it implies a whole new way of working for people in the industry, and the issue of streamlining the flow of advertising from the locality and from national campaigns into the system still needs to be addressed.

Inspiration

- PRES - of historical interest only, now.

- Wikipedia

- WikiNews (see also interview with Jimmy Wales, founder of WikiMedia)

- Robin Miller's essay 'A Recipe for Newspaper Survival in the Internet Age'


The fool on the hill by Simon Brooke is licensed under a Creative Commons Attribution-ShareAlike 3.0 Unported License